Infrastructure as Code in Azure: Enterprise CI/CD Pipelines and Multi-Environment Automation

Part 3 of our Infrastructure as Code in Azure series, where we build a CI/CD pipeline with GitHub Actions, implement multi-environment strategies, and establish enterprise DevOps practices for scalable infrastructure automation.

Continuing our Infrastructure as Code in Azure series, this article elevates our Terraform and Ansible automation to enterprise-grade CI/CD pipelines. In Parts 1 and 2, we built the foundation with infrastructure provisioning and security hardening. Now, we’ll implement near-production-ready deployment workflows, multi-environment strategies, and basic testing frameworks that scale across development teams.

Modern DevOps requires more than just infrastructure automation; it demands reliable, auditable, and scalable deployment pipelines. This tutorial demonstrates how to transform our existing Terraform and Ansible codebase into a CI/CD system using GitHub Actions, complete with security scanning, approval workflows, and disaster recovery capabilities.

In this tutorial, you’ll learn how to:

- Build near-production-ready CI/CD pipelines using GitHub Actions for automated deployments

- Implement secure Azure authentication with OpenID Connect (OIDC) for passwordless workflows

- Create multi-environment strategies with isolated development, staging, and production deployments

- Establish approval workflows with Terraform plan reviews and environment promotion gates

- Integrate security scanning with automated vulnerability detection and compliance checking

- Implement basic testing frameworks for infrastructure validation and deployment verification

This practical guide demonstrates how to scale infrastructure automation from individual deployments to enterprise-grade DevOps practices. By the end, you’ll have a complete CI/CD system capable of managing complex, multi-environment infrastructure with the reliability and security standards required for production workloads.

Prerequisites and Current State

This tutorial builds upon Part 1: Infrastructure as Code in Azure – Introduction to Terraform and Part 2: Infrastructure as Code in Azure – Security Hardening and Configuration With Ansible.

To proceed, ensure you have:

- Completed Parts 1 and 2 with working Terraform and Ansible automation

- A GitHub repository containing your infrastructure code

- Azure CLI configured with appropriate subscription access

- Basic understanding of GitHub Actions and YAML syntax

The CI/CD pipelines in this guide assume your project structure matches the previous tutorials, with separated provisioning/ and configuration-management/ directories containing your Terraform and Ansible configurations respectively.

Enterprise Project Structure

Before implementing CI/CD pipelines, we need to restructure our project to support multi-environment deployments. This organization follows DevOps best practices by separating environments, implementing proper testing frameworks, and providing automation scripts.

Our enhanced project structure will look like this by the project's end:

dt-series/
├── .github/
│   └── workflows/                      # GitHub Actions CI/CD workflows
│       ├── pr-validation.yml           # Pull request validation pipeline
│       ├── deploy-dev.yml              # Development environment deployment
│       ├── deploy-staging.yml          # Staging environment deployment
│       ├── deploy-production.yml       # Production environment deployment
│       ├── disaster-recovery.yml       # Disaster recovery workflow
│       └── environment-promotion.yml   # Environment promotion pipeline
├── environments/                       # Multi-environment configurations
│   ├── dev/
│   │   ├── terraform.tfvars            # Development environment variables
│   │   ├── backend.tf                  # Development Terraform backend
│   │   └── ansible_vars.yml            # Development Ansible variables
│   ├── staging/
│   │   ├── terraform.tfvars            # Staging environment variables
│   │   ├── backend.tf                  # Staging Terraform backend
│   │   └── ansible_vars.yml            # Staging Ansible variables
│   └── production/
│       ├── terraform.tfvars            # Production environment variables
│       ├── backend.tf                  # Production Terraform backend
│       └── ansible_vars.yml            # Production Ansible variables
├── tests/                              # Basic testing framework
│   ├── pytest.ini                      # Pytest configuration
│   ├── requirements.txt
│   ├── test_infrastructure.py          # Infrastructure validation tests
│   ├── test_security.py                # Security compliance tests
│   ├── test_smoke.py                   # Smoke tests
│   └── test_health.py                  # Production readiness tests
├── provisioning/                       # Terraform infrastructure code (from Part 1)
├── configuration-management/           # Ansible configuration (from Part 2)
├── Makefile                            # Enhanced automation workflow
└── README.md                           # Updated project documentation

Go ahead and create the required directory structure. Run the command below from the project root:

mkdir -p tests/ environments/dev environments/staging environments/production .github/workflows/

GitHub Environments Setup

Create GitHub environments for deployment protection by navigating to: Repository → Settings → Environments

Create these environments:

- development - No protection rules needed

- staging - No protection rules needed

- production - Select Required reviewers and add yourself. Set Wait timer to 0 minutes (or add a delay if you want)

Note: Depending on your GitHub plan, Required reviewers may only appear on public repositories.

Service Principal for GitHub Actions

Before we can create our workflows, we need to do some setup to get GitHub Actions working. Start by creating a service principal for GitHub Actions:

# Make sure you source your .env variables
source provisioning/.env

# Log in to Azure
az login

# Set your subscription (replace with your subscription ID)
az account set --subscription "${ARM_SUBSCRIPTION_ID}"

# Create service principal
az ad sp create-for-rbac --name "github-actions-sp" \
  --role contributor \
  --scopes "/subscriptions/${ARM_SUBSCRIPTION_ID}"

You should see an appId value in the output; set it as its own variable. Fill in your GitHub username and repository name as well:

APP_ID="your-app-id-from-previous-step"

# Get your GitHub repository details
# Replace: OWNER = your GitHub username/org, REPO = your repository name
GITHUB_OWNER="your-github-username" # username, not email. for example: aaronlmathis
GITHUB_REPO="your-repo-name"

Now, let’s configure OIDC federation:

# Create OIDC credential for main branch (production)
az ad app federated-credential create \
  --id "$APP_ID" \
  --parameters '{
    "name": "github-main",
    "issuer": "https://token.actions.githubusercontent.com",
    "subject": "repo:'"$GITHUB_OWNER"'/'"$GITHUB_REPO"':ref:refs/heads/main",
    "description": "GitHub Actions Main Branch",
    "audiences": ["api://AzureADTokenExchange"]
  }'

# Create OIDC credential for production environment
az ad app federated-credential create \
  --id "$APP_ID" \
  --parameters '{
    "name": "github-production",
    "issuer": "https://token.actions.githubusercontent.com",
    "subject": "repo:'"$GITHUB_OWNER"'/'"$GITHUB_REPO"':environment:production",
    "description": "GitHub Actions – production environment",
    "audiences": ["api://AzureADTokenExchange"]
  }'

# Create OIDC credential for staging environment
az ad app federated-credential create \
  --id "$APP_ID" \
  --parameters '{
    "name": "github-staging",
    "issuer": "https://token.actions.githubusercontent.com",
    "subject": "repo:'"$GITHUB_OWNER"'/'"$GITHUB_REPO"':environment:staging",
    "description": "GitHub Actions – staging environment",
    "audiences": ["api://AzureADTokenExchange"]
  }'

# Create OIDC credential for development environment
az ad app federated-credential create \
  --id "$APP_ID" \
  --parameters '{
    "name": "github-development",
    "issuer": "https://token.actions.githubusercontent.com",
    "subject": "repo:'"$GITHUB_OWNER"'/'"$GITHUB_REPO"':environment:development",
    "description": "GitHub Actions – development environment",
    "audiences": ["api://AzureADTokenExchange"]
  }'

# Create OIDC credential for develop branch
az ad app federated-credential create \
  --id "$APP_ID" \
  --parameters '{
    "name": "github-develop",
    "issuer": "https://token.actions.githubusercontent.com",
    "subject": "repo:'"$GITHUB_OWNER"'/'"$GITHUB_REPO"':ref:refs/heads/develop",
    "description": "GitHub Actions Develop Branch",
    "audiences": ["api://AzureADTokenExchange"]
  }'

# Create OIDC credential for pull requests
az ad app federated-credential create \
  --id "$APP_ID" \
  --parameters '{
    "name": "github-pr",
    "issuer": "https://token.actions.githubusercontent.com",
    "subject": "repo:'"$GITHUB_OWNER"'/'"$GITHUB_REPO"':pull_request",
    "description": "GitHub Actions Pull Requests",
    "audiences": ["api://AzureADTokenExchange"]
  }'

Generate SSH Key

Let’s generate a new SSH key for Ansible to use:

# Generate SSH key pair
ssh-keygen -t rsa -b 4096 -f ~/.ssh/azure_vm_key -C "azure-vm-key"

Configure GitHub Secrets

Go to your GitHub repository Settings → Secrets and variables → Actions, and add:

- AZURE_CLIENT_ID: The application (client) ID

- AZURE_TENANT_ID: Your Azure tenant ID

- AZURE_SUBSCRIPTION_ID: Your Azure subscription ID

- SSH_PRIVATE_KEY: Your SSH private key for VM access

You can get these values by running the following in the same terminal you just created your service principal in:

# Get the required values
echo "AZURE_CLIENT_ID: $APP_ID"
echo "AZURE_TENANT_ID: $(az account show --query tenantId -o tsv)"
echo "AZURE_SUBSCRIPTION_ID: $(az account show --query id -o tsv)"
echo "SSH_PRIVATE_KEY: $(cat ~/.ssh/azure_vm_key)"

Setting Up Azure Storage for State Management

Enterprise CI/CD requires centralized Terraform state management with proper locking and encryption. We’ll configure Azure Storage for remote state storage across all environments. First, let’s create a storage account for each environment and generate the environment-specific Terraform backend files.
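Storage account names are limited to 3 to 24 lowercase letters and digits, so a prefix plus a 10-digit Unix timestamp leaves little headroom. The following is a minimal Python sketch of that naming scheme with a length check; the helper name `storage_account_name` is hypothetical and not part of the tutorial's repository.

```python
import re
import time

# Azure storage account names: 3-24 characters, lowercase letters and digits only.
_NAME_RE = re.compile(r"[a-z0-9]{3,24}")


def storage_account_name(prefix, now=None):
    """Return prefix + Unix timestamp, or raise if the result is not a valid name.

    `now` can be passed in to make the result reproducible (for example in tests).
    """
    suffix = str(int(time.time() if now is None else now))
    name = f"{prefix}{suffix}"
    if not _NAME_RE.fullmatch(name):
        raise ValueError(f"invalid storage account name: {name!r}")
    return name


if __name__ == "__main__":
    for prefix in ("tfstatedev", "tfstatestaging", "tfstateprod"):
        print(storage_account_name(prefix))
```

With a 10-digit timestamp, the `tfstatestaging` prefix lands exactly on the 24-character limit, so a longer prefix would be rejected by Azure.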

Development Environment

Create the Azure storage for development:

# Create resource group
az group create --name "rg-terraform-state-dev" --location "East US"

# Create storage account (name must be globally unique)
STORAGE_ACCOUNT_NAME="tfstatedev$(date +%s)"

az storage account create \
  --resource-group "rg-terraform-state-dev" \
  --name "$STORAGE_ACCOUNT_NAME" \
  --sku Standard_LRS \
  --encryption-services blob

# Create storage container
az storage container create \
  --name "tfstate" \
  --account-name "$STORAGE_ACCOUNT_NAME"

# Get storage account key
az storage account keys list \
  --resource-group "rg-terraform-state-dev" \
  --account-name "$STORAGE_ACCOUNT_NAME" \
  --query '[0].value' -o tsv

Now create environments/dev/backend.tf:

cat > environments/dev/backend.tf <<EOF
terraform {
  backend "azurerm" {
    resource_group_name  = "rg-terraform-state-dev"
    storage_account_name = "${STORAGE_ACCOUNT_NAME}"
    container_name       = "tfstate"
    key                  = "dev.terraform.tfstate"
  }
}
EOF

Staging Environment

Create the Azure storage for staging:

# Create resource group
az group create --name "rg-terraform-state-staging" --location "East US"

# Create storage account (name must be globally unique)
STORAGE_ACCOUNT_NAME="tfstatestaging$(date +%s)"

az storage account create \
  --resource-group "rg-terraform-state-staging" \
  --name "$STORAGE_ACCOUNT_NAME" \
  --sku Standard_LRS \
  --encryption-services blob

# Create storage container
az storage container create \
  --name "tfstate" \
  --account-name "$STORAGE_ACCOUNT_NAME"

# Get storage account key
az storage account keys list \
  --resource-group "rg-terraform-state-staging" \
  --account-name "$STORAGE_ACCOUNT_NAME" \
  --query '[0].value' -o tsv

Now create environments/staging/backend.tf:

cat > environments/staging/backend.tf <<EOF
terraform {
  backend "azurerm" {
    resource_group_name  = "rg-terraform-state-staging"
    storage_account_name = "${STORAGE_ACCOUNT_NAME}"
    container_name       = "tfstate"
    key                  = "staging.terraform.tfstate"
  }
}
EOF

Production Environment

Create the Azure storage for production:

# Create resource group
az group create --name "rg-terraform-state-production" --location "East US"

# Create storage account (name must be globally unique)
STORAGE_ACCOUNT_NAME="tfstateprod$(date +%s)"

az storage account create \
  --resource-group "rg-terraform-state-production" \
  --name "$STORAGE_ACCOUNT_NAME" \
  --sku Standard_LRS \
  --encryption-services blob

# Create storage container
az storage container create \
  --name "tfstate" \
  --account-name "$STORAGE_ACCOUNT_NAME"

# Get storage account key
az storage account keys list \
  --resource-group "rg-terraform-state-production" \
  --account-name "$STORAGE_ACCOUNT_NAME" \
  --query '[0].value' -o tsv

Now create environments/production/backend.tf:

cat > environments/production/backend.tf <<EOF
terraform {
  backend "azurerm" {
    resource_group_name  = "rg-terraform-state-production"
    storage_account_name = "${STORAGE_ACCOUNT_NAME}"
    container_name       = "tfstate"
    key                  = "production.terraform.tfstate"
  }
}
EOF

Multi-Environment Configuration Strategy

Enterprise deployments require environment-specific configurations that balance consistency with flexibility. Our approach uses parameterized configurations that adapt to different environments while maintaining infrastructure as code principles.

Each environment has its own configuration folder in the environments/ directory:

- environments/dev/ - Development environment

- environments/staging/ - Staging environment

- environments/production/ - Production environment

We have already created backend.tf files that tell Terraform how and where to store the remote state for each environment. These files configure the backend to use an Azure Storage Account, ensuring that the state is securely stored, locked, and shared across your team.

Each environment (dev, staging, production) has its own dedicated storage account and container, isolating state files and preventing accidental cross-environment changes. This setup enables safe collaboration, supports state locking to avoid concurrent modifications, and provides a clear audit trail for infrastructure changes in each environment.
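The three backend.tf files differ only in the environment name and the storage account. As a rough illustration of that pattern, here is a small Python sketch that renders the same backend block from those two inputs; `render_backend` is a hypothetical helper, and the resource group and state key naming simply mirror the conventions used in the commands above.

```python
def render_backend(environment, storage_account):
    """Render an azurerm backend block for one environment.

    Naming follows the tutorial's convention: one resource group and one
    state key per environment, all using a container called "tfstate".
    """
    return (
        "terraform {\n"
        '  backend "azurerm" {\n'
        f'    resource_group_name  = "rg-terraform-state-{environment}"\n'
        f'    storage_account_name = "{storage_account}"\n'
        '    container_name       = "tfstate"\n'
        f'    key                  = "{environment}.terraform.tfstate"\n'
        "  }\n"
        "}\n"
    )


if __name__ == "__main__":
    # The storage account name here is a placeholder, not a real account.
    print(render_backend("staging", "tfstatestaging1700000000"))
```

Because each environment gets its own key and storage account, a plan or apply in one environment never reads or locks the state of another.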

What About Differences in Resources or Configurations?

To support environment-specific customization, we will create a terraform.tfvars file and an ansible_vars.yml file in each environment’s directory as well. The terraform.tfvars file allows you to define custom Terraform variables for each environment, such as resource names, sizes, locations, and access controls.

Similarly, the ansible_vars.yml file enables you to specify Ansible variables tailored to each environment’s configuration and security requirements. This approach ensures that each environment can have unique settings while maintaining a consistent project structure and automation workflow.

Development Environment Configuration

Development environments prioritize cost optimization and developer productivity. Create environments/dev/terraform.tfvars:

# Development Environment Configuration

resource_group_name = "rg-terraform-state-dev"

environment = "dev"

location = "East US"

vm_name = "dev-vm"

vm_size = "Standard_B1s"

admin_username = "azureuser"

ssh_allowed_cidr = "0.0.0.0/0" # More permissive for dev

vnet_name = "dev-vnet"

vnet_address_space = "10.0.0.0/16"

subnet_name = "dev-subnet"

subnet_prefix = "10.0.1.0/24"

budget_amount = 50

budget_threshold_percentage = 80

alert_emails = ["[email protected]"]

ssh_public_key_path = "keys/id_rsa.pub"

Development-specific Ansible variables enable relaxed security for easier testing. Create environments/dev/ansible_vars.yml:

# Development environment variables

environment_name: "dev"

ssh_port: 22

fail2ban_maxretry: 5 # More lenient for dev

fail2ban_bantime: 1800 # Shorter ban time for dev

fail2ban_findtime: 300

# System configuration

timezone: "UTC"

ntp_servers:

- 0.pool.ntp.org

- 1.pool.ntp.org

# Cron configuration

backup_schedule: "0 4 * * *" # Daily at 4 AM

log_rotation_schedule: "0 5 * * *" # Daily at 5 AM

# Azure Monitor configuration

azure_monitor_enabled: true

# Development specific settings

debug_mode: true

log_level: "debug"

Staging Environment Configuration

Staging environments mirror production configurations for accurate testing. Create environments/staging/terraform.tfvars:

# Staging Environment Configuration

resource_group_name = "rg-terraform-state-staging"

environment = "staging"

location = "East US"

vm_name = "staging-vm"

vm_size = "Standard_B1s" # You would probably want to use a more powerful class of VM here, but this is a tutorial.

admin_username = "azureuser"

ssh_allowed_cidr = "0.0.0.0/0" # You would want to make this more restrictive

vnet_name = "staging-vnet"

vnet_address_space = "10.1.0.0/16"

subnet_name = "staging-subnet"

subnet_prefix = "10.1.1.0/24"

budget_amount = 100

budget_threshold_percentage = 70

alert_emails = ["[email protected]"]

ssh_public_key_path = "keys/id_rsa.pub"

Staging Ansible variables balance security with testing requirements. Create environments/staging/ansible_vars.yml:

# Staging environment variables

environment_name: "staging"

ssh_port: 22

fail2ban_maxretry: 3

fail2ban_bantime: 3600

fail2ban_findtime: 600

# System configuration

timezone: "UTC"

ntp_servers:

- 0.pool.ntp.org

- 1.pool.ntp.org

- 2.pool.ntp.org

# Cron configuration

backup_schedule: "0 3 * * *" # Daily at 3 AM

log_rotation_schedule: "0 4 * * *" # Daily at 4 AM

# Azure Monitor configuration

azure_monitor_enabled: true

# Staging specific settings

debug_mode: false

log_level: "info"

Production Environment Configuration

Production environments enforce strict security and performance standards. Create environments/production/terraform.tfvars:

# Production Environment Configuration

resource_group_name = "rg-terraform-state-production"

environment = "production"

location = "East US" # Same region as dev and staging in this tutorial; consider a separate region for production

vm_name = "prod-vm"

vm_size = "Standard_B1s" # Tutorial size; use a larger instance for real production workloads

admin_username = "azureuser"

ssh_allowed_cidr = "0.0.0.0/0" # Here you would want very restrictive access

vnet_name = "prod-vnet"

vnet_address_space = "10.2.0.0/16"

subnet_name = "prod-subnet"

subnet_prefix = "10.2.1.0/24"

budget_amount = 200

budget_threshold_percentage = 60

alert_emails = ["[email protected]", "[email protected]"]

ssh_public_key_path = "keys/id_rsa.pub"

Production Ansible variables implement maximum security hardening. Create environments/production/ansible_vars.yml:

# Production environment variables

environment_name: "production"

ssh_port: 22

fail2ban_maxretry: 2 # Strict for production

fail2ban_bantime: 7200 # Longer ban time for production

fail2ban_findtime: 300

# System configuration

timezone: "UTC"

ntp_servers:

- 0.pool.ntp.org

- 1.pool.ntp.org

- 2.pool.ntp.org

- 3.pool.ntp.org

# Cron configuration

backup_schedule: "0 2 * * *" # Daily at 2 AM

log_rotation_schedule: "0 3 * * *" # Daily at 3 AM

# Azure Monitor configuration

azure_monitor_enabled: true

# Production specific settings

debug_mode: false

log_level: "warn"
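With three sets of network values maintained by hand, it is easy to introduce an overlapping address space or a subnet that falls outside its VNet. The sketch below is one possible sanity check using Python's standard ipaddress module; the `ENVIRONMENTS` dictionary copies the vnet_address_space and subnet_prefix values from the tfvars files above, and `find_network_problems` is a hypothetical helper rather than part of the tutorial's test suite.

```python
import ipaddress
from itertools import combinations

# Values copied from the three terraform.tfvars files above.
ENVIRONMENTS = {
    "dev": {"vnet": "10.0.0.0/16", "subnet": "10.0.1.0/24"},
    "staging": {"vnet": "10.1.0.0/16", "subnet": "10.1.1.0/24"},
    "production": {"vnet": "10.2.0.0/16", "subnet": "10.2.1.0/24"},
}


def find_network_problems(environments):
    """Return a list of human-readable problems; an empty list means no issues found."""
    problems = []
    vnets = {}
    for name, cfg in environments.items():
        vnet = ipaddress.ip_network(cfg["vnet"])
        subnet = ipaddress.ip_network(cfg["subnet"])
        vnets[name] = vnet
        # Each subnet must sit inside its own VNet address space.
        if not subnet.subnet_of(vnet):
            problems.append(f"{name}: subnet {subnet} is outside vnet {vnet}")
    # No two environments should share address space.
    for (a, net_a), (b, net_b) in combinations(vnets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{a} and {b}: address spaces overlap")
    return problems


if __name__ == "__main__":
    issues = find_network_problems(ENVIRONMENTS)
    print("\n".join(issues) if issues else "network configuration looks consistent")
```

Keeping the address spaces disjoint is not strictly required while the environments are isolated, but it avoids rework if the VNets are ever peered.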

GitHub Actions CI/CD Pipelines

GitHub Actions provides a flexible, cloud-native platform for defining CI/CD pipelines as code. Workflows are triggered by repository events, such as pull requests, merges, or manual dispatch, and execute jobs in isolated runners.

Each workflow can orchestrate infrastructure provisioning, configuration management, testing, and deployment steps, while securely integrating with Azure using OpenID Connect (OIDC) for passwordless authentication.
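Passwordless authentication works because Azure compares the subject claim in GitHub's OIDC token with the federated credentials created earlier. As a sketch of how those subject strings are formed for the three cases used in this series (branch, environment, pull request), consider the following Python helper; the function name `federated_subject` and the example owner and repository are made up for illustration.

```python
def federated_subject(owner, repo, *, branch=None, environment=None, pull_request=False):
    """Build the OIDC subject string for exactly one kind of trigger."""
    chosen = [branch is not None, environment is not None, bool(pull_request)]
    if sum(chosen) != 1:
        raise ValueError("choose exactly one of branch, environment, pull_request")
    prefix = f"repo:{owner}/{repo}"
    if branch is not None:
        return f"{prefix}:ref:refs/heads/{branch}"
    if environment is not None:
        return f"{prefix}:environment:{environment}"
    return f"{prefix}:pull_request"


if __name__ == "__main__":
    # "octo-org" and "infra" are placeholder names.
    print(federated_subject("octo-org", "infra", branch="develop"))
    print(federated_subject("octo-org", "infra", environment="production"))
    print(federated_subject("octo-org", "infra", pull_request=True))
```

A job that declares an environment presents the environment form of the subject rather than the branch form, which is why separate credentials were created for development, staging, and production.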

Key benefits of using GitHub Actions for CI/CD in projects include:

- Declarative Workflow as Code: All pipeline logic is version-controlled in YAML files, ensuring transparency and repeatability.

- Multi-Environment Support: Separate workflows and environment-specific variables enable safe deployments to development, staging, and production.

- Integrated Security: Built-in secrets management, OIDC authentication, and automated security scanning help enforce enterprise security standards.

- Approval Gates and Promotion: Manual approval steps and environment protection rules ensure that only validated changes reach production.

- Basic Testing: Automated linting, validation, integration, and security tests run at every stage to catch issues early.

- Scalability and Auditability: All workflow runs are logged and auditable, supporting compliance and team collaboration.

By leveraging GitHub Actions, teams can achieve reliable, automated, and secure infrastructure deployments that scale with organizational needs.

Let’s start by creating our first CI/CD workflow, PR Validation, now.

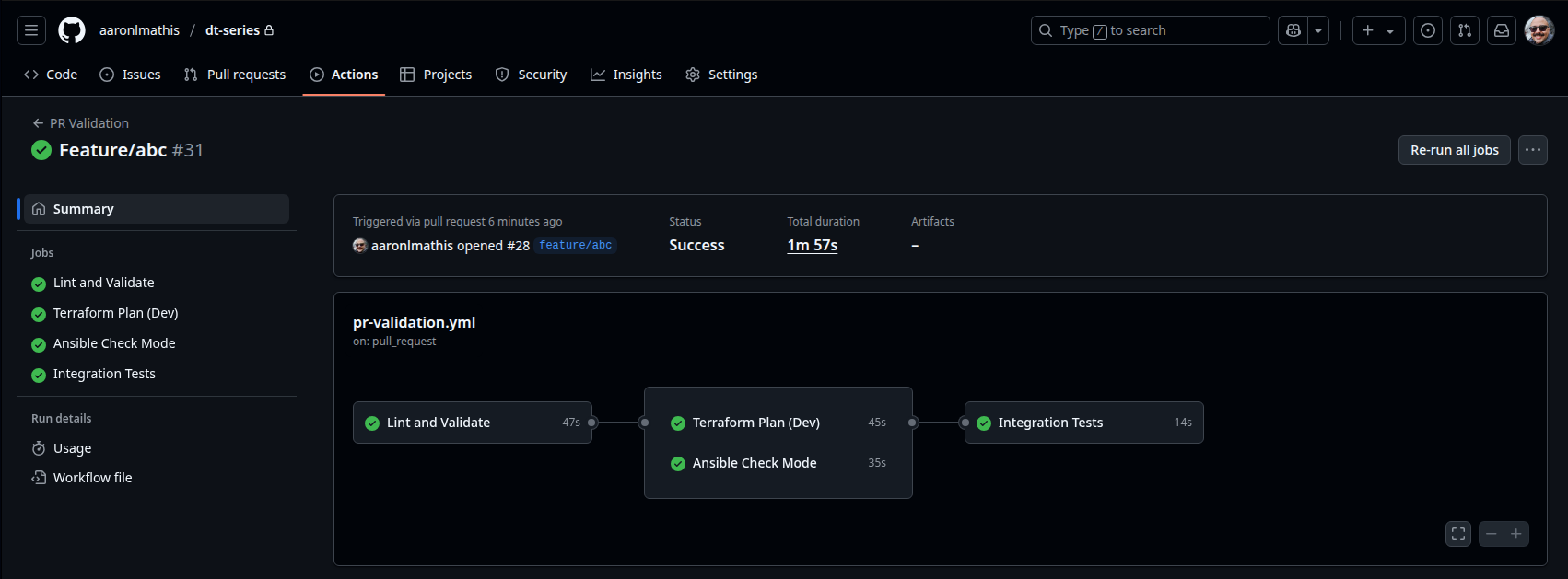

Pull Request Validation Workflow

The PR validation workflow is a critical part of the CI/CD process that runs automatically on every pull request targeting the develop branch. Its main purpose is to ensure that any proposed changes to infrastructure or configuration code meet quality, security, and integration standards before merging. This workflow performs several key checks:

- Linting and Validation: It checks Terraform formatting, validates Terraform and Ansible configurations, runs Ansible linting, and performs YAML linting to catch syntax errors and enforce best practices.

- Terraform Plan: It initializes Terraform with the development environment configuration and generates a plan, allowing reviewers to see what infrastructure changes would occur if the PR is merged.

- Ansible Check Mode: It runs Ansible playbooks in dry-run mode using a mock inventory, giving an early signal that configuration changes will apply cleanly before they touch a real host.

- Integration Tests: It executes Python-based infrastructure tests to verify that all required environment files exist and are correctly formatted.

By automating these checks, the workflow helps prevent misconfigurations, enforces code quality, and reduces the risk of introducing errors into the main codebase. Only PRs that pass all validation steps can be safely merged, supporting a robust and secure infrastructure-as-code pipeline.

Create .github/workflows/pr-validation.yml:

name: 'PR Validation'

on:
  pull_request:
    branches: [ develop ]
    paths:
      - 'provisioning/**'
      - 'configuration-management/**'
      - 'environments/**'

# ── global defaults ───────────────────────────────────────────────────────────
env:
  ARM_USE_OIDC: true
  ARM_USE_CLI: false
  ENVIRONMENT: dev

permissions:
  id-token: write
  contents: read
  pull-requests: write

# ──────────────────────────────────────────────────────────────────────────────
jobs:
  # 1 ────────────────── Lint & static validation ────────────────────────────────
  lint-validate:
    name: 'Lint and Validate'
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v3
        with:
          terraform_version: 1.12.2

      - name: Setup Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.11'

      - name: Install Ansible
        run: |
          python -m pip install --upgrade pip
          pip install ansible ansible-lint yamllint

      # Terraform Validation
      - name: Terraform fmt check
        run: |
          cd provisioning
          terraform fmt -check
        continue-on-error: true

      - name: Terraform Init (validation only)
        run: |
          cd provisioning
          cp ../environments/dev/terraform.tfvars .
          cp ../environments/dev/backend.tf .
          terraform init -backend=false

      - name: Terraform Validate
        run: |
          cd provisioning
          terraform validate

      # Ansible Validation
      - name: Ansible Lint
        run: |
          cd configuration-management
          ansible-lint site.yml
        continue-on-error: true

      - name: YAML Lint
        run: |
          yamllint -d relaxed configuration-management/
        continue-on-error: true

      - name: Ansible Syntax Check
        run: |
          cd configuration-management
          # Create temporary inventory for syntax check
          mkdir -p temp_inventory
          cat > temp_inventory/hosts.yml << EOF
          all:
            children:
              azure_vms:
                hosts:
                  test-vm:
                    ansible_host: 127.0.0.1
                    ansible_user: testuser
                    ansible_python_interpreter: /usr/bin/python3
          EOF
          ansible-playbook --syntax-check site.yml -i temp_inventory/hosts.yml

  # 2 ────────────────── Terraform plan against Dev backend ──────────────────────
  terraform-plan:
    name: 'Terraform Plan (Dev)'
    runs-on: ubuntu-latest
    needs: lint-validate
    env:
      ARM_CLIENT_ID: ${{ secrets.AZURE_CLIENT_ID }}
      ARM_TENANT_ID: ${{ secrets.AZURE_TENANT_ID }}
      ARM_SUBSCRIPTION_ID: ${{ secrets.AZURE_SUBSCRIPTION_ID }}
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Azure Login via OIDC
        uses: azure/login@v1
        with:
          client-id: ${{ env.ARM_CLIENT_ID }}
          tenant-id: ${{ env.ARM_TENANT_ID }}
          subscription-id: ${{ env.ARM_SUBSCRIPTION_ID }}

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v3
        with:
          terraform_version: 1.12.2

      - name: Setup SSH Keys
        run: |
          mkdir -p ~/.ssh provisioning/keys
          echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/id_rsa
          chmod 600 ~/.ssh/id_rsa
          # Generate public key from private key
          ssh-keygen -y -f ~/.ssh/id_rsa > provisioning/keys/id_rsa.pub

      - name: Copy environment configuration
        run: |
          cp environments/${{ env.ENVIRONMENT }}/terraform.tfvars provisioning/
          cp environments/${{ env.ENVIRONMENT }}/backend.tf provisioning/

      - name: Terraform Init + Plan
        run: |
          cd provisioning
          terraform init
          terraform plan -var-file=terraform.tfvars

  # 3 ────────────────── Ansible dry‑run (check mode) ────────────────────────────
  ansible-check:
    name: 'Ansible Check Mode'
    runs-on: ubuntu-latest
    needs: lint-validate
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Setup Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.11'

      - name: Install Ansible
        run: |
          python -m pip install --upgrade pip
          pip install ansible

      - name: Install Ansible collections
        run: |
          cd configuration-management
          ansible-galaxy collection install -r requirements.yml

      - name: Create mock inventory
        run: |
          mkdir -p configuration-management/inventory
          cat > configuration-management/inventory/hosts.yml << EOF
          all:
            children:
              azure_vms:
                hosts:
                  mock-vm:
                    ansible_host: 127.0.0.1
                    ansible_user: azureuser
                    ansible_python_interpreter: /usr/bin/python3
          EOF

      - name: Ansible Check Mode (Dry Run)
        run: |
          cd configuration-management
          cp ../environments/dev/ansible_vars.yml group_vars/azure_vms.yml
          ansible-playbook -i inventory/hosts.yml site.yml --check --diff
        continue-on-error: true

  # 4 ────────────────── Python integration tests (local) ────────────────────────
  integration-tests:
    name: 'Integration Tests'
    runs-on: ubuntu-latest
    needs: [terraform-plan, ansible-check]
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Setup Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.11'

      - name: Install test dependencies
        run: |
          pip install pytest pyyaml

      - name: Run infrastructure tests
        run: |
          python -m pytest tests/test_infrastructure.py -v

Automated Deployment

Automated deployment workflows are a core part of modern CI/CD (Continuous Integration and Continuous Deployment) pipelines. They enable teams to automatically build, test, and deploy infrastructure or application changes whenever code is pushed or merged, reducing manual intervention and the risk of human error.

In the context of GitHub Actions, these workflows are defined as YAML files that specify a series of jobs and steps to execute in response to repository events, such as code pushes or pull requests. This automation ensures that deployments are consistent, repeatable, and can be audited, supporting best practices for DevOps and infrastructure as code.

Development Environment Deployment

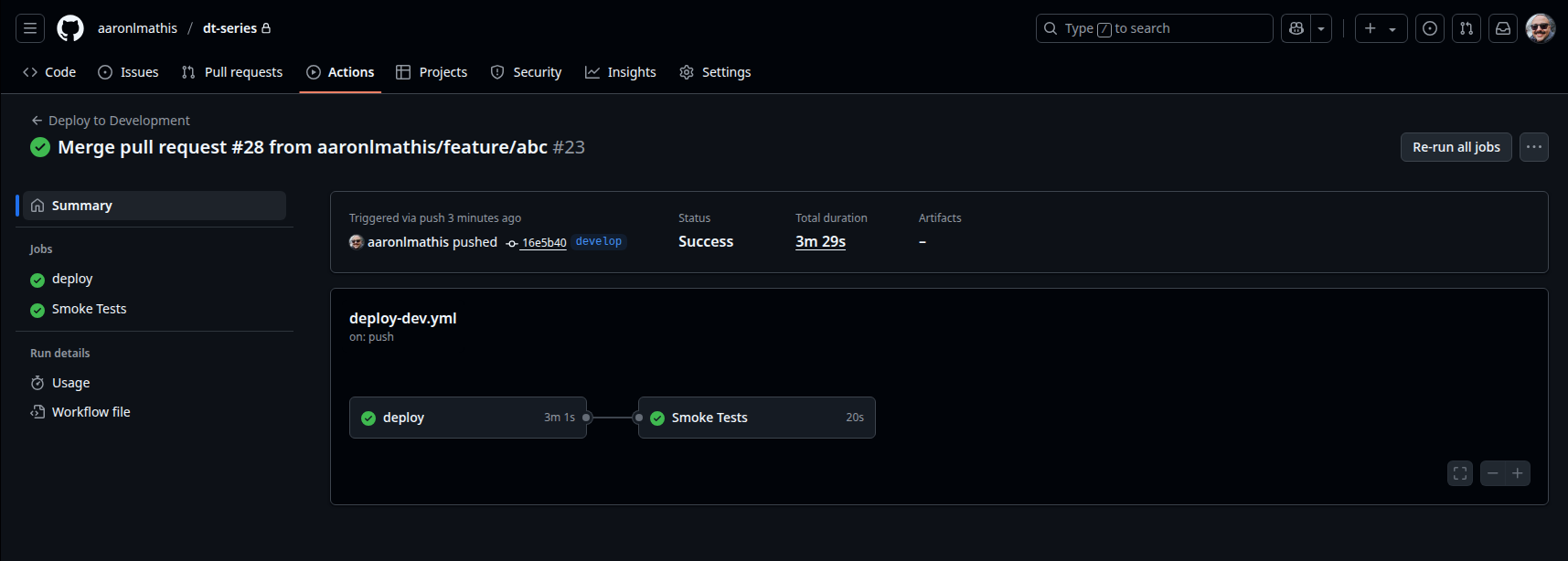

The following GitHub Actions workflow, deploy-development.yml, automates the deployment of infrastructure and configuration to the development environment whenever changes are pushed to the develop branch (for example, when a pull request is merged) or when it is triggered manually. This workflow performs several key tasks:

- Azure Authentication: Logs into Azure using OpenID Connect (OIDC) and GitHub Actions secrets for secure, passwordless authentication.

- Terraform Deployment: Initializes Terraform with the development environment’s configuration files, applies infrastructure changes, and retrieves output values such as the VM’s public IP and name.

- Ansible Configuration: Sets up an Ansible inventory using the Terraform outputs, copies environment-specific variables, installs required Ansible collections, waits for the VM to become accessible via SSH, bootstraps Python on the VM, and applies the Ansible playbook to configure the VM.

- Smoke Testing: After deployment, runs basic smoke tests to verify connectivity, service availability, and correct configuration of the deployed VM, ensuring the environment is operational and ready for further development or testing.

This workflow ensures that every change to the development environment is automatically deployed, configured, and validated, supporting rapid iteration and early detection of issues in the CI/CD pipeline.
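The hand-off from Terraform to Ansible happens through the generated inventory file, where YAML nesting matters. Below is a Python sketch that renders the same inventory from the two Terraform outputs; `render_inventory` is a hypothetical helper, and placing `vars` at the azure_vms group level is an assumption about the intended structure.

```python
def render_inventory(vm_name, vm_ip, environment, user="azureuser"):
    """Render a YAML inventory with one host in the azure_vms group."""
    return f"""\
all:
  children:
    azure_vms:
      hosts:
        {vm_name}:
          ansible_host: {vm_ip}
          ansible_user: {user}
          ansible_ssh_private_key_file: ~/.ssh/id_rsa
          ansible_python_interpreter: /usr/bin/python3
      vars:
        ansible_ssh_common_args: '-o StrictHostKeyChecking=no'
        environment_name: "{environment}"
"""


if __name__ == "__main__":
    # 203.0.113.10 is a documentation-only address used as a placeholder.
    print(render_inventory("dev-vm", "203.0.113.10", "dev"))
```

Host-specific settings sit under the host name, while settings shared by every VM in the group sit under `vars`, so adding a second host later only needs a new entry under `hosts`.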

Create file .github/workflows/deploy-development.yml:

name: Deploy to Development

on:
  push:
    branches: [develop]
    paths:
      - 'provisioning/**'
      - 'configuration-management/**'
      - 'environments/dev/**'
  workflow_dispatch:

# ── global defaults ───────────────────────────────────────────────────────────
env:
  ARM_USE_OIDC: true
  ARM_USE_CLI: false
  ENVIRONMENT: dev # used for env‑specific files

permissions:
  id-token: write
  contents: read

# ──────────────────────────────────────────────────────────────────────────────
jobs:
  # 1 ────────────────── Deploy infra & config ───────────────────────────────────
  deploy:
    name: 'Deploy Infrastructure & Configuration'
    runs-on: ubuntu-latest
    environment: development
    env: # ARM vars once per job
      ARM_CLIENT_ID: ${{ secrets.AZURE_CLIENT_ID }}
      ARM_TENANT_ID: ${{ secrets.AZURE_TENANT_ID }}
      ARM_SUBSCRIPTION_ID: ${{ secrets.AZURE_SUBSCRIPTION_ID }}
    outputs:
      vm_ip: ${{ steps.tf_out.outputs.vm_ip }}
      vm_name: ${{ steps.tf_out.outputs.vm_name }}
    steps:
      - uses: actions/checkout@v4
        with:
          fetch-depth: 0 # full history for tf/ansible versioning

      - name: Azure login (OIDC)
        uses: azure/login@v1
        with:
          client-id: ${{ env.ARM_CLIENT_ID }}
          tenant-id: ${{ env.ARM_TENANT_ID }}
          subscription-id: ${{ env.ARM_SUBSCRIPTION_ID }}

      # Toolchain
      - uses: hashicorp/setup-terraform@v3
        with:
          terraform_version: 1.12.2

      - uses: actions/setup-python@v4
        with:
          python-version: "3.11"

      - name: Install Ansible
        run: |
          python -m pip install --upgrade pip
          pip install ansible

      # SSH key for both Terraform (pub key) and Ansible/Smoke
      - name: Prepare SSH key
        run: |
          mkdir -p ~/.ssh provisioning/keys
          echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/id_rsa
          chmod 600 ~/.ssh/id_rsa
          ssh-keygen -y -f ~/.ssh/id_rsa > provisioning/keys/id_rsa.pub

      # ── Terraform ──────────────────────────────────────────────────────────
      - name: Copy environment files
        run: |
          cp environments/${{ env.ENVIRONMENT }}/terraform.tfvars provisioning/
          cp environments/${{ env.ENVIRONMENT }}/backend.tf provisioning/

      - name: Terraform init ▪ plan ▪ apply
        run: |
          cd provisioning
          terraform init
          terraform plan -var-file=terraform.tfvars
          terraform apply -auto-approve -var-file=terraform.tfvars

      - name: Capture TF outputs
        id: tf_out
        run: |
          cd provisioning
          echo "vm_ip=$(terraform output -raw public_ip_address)" >> "$GITHUB_OUTPUT"
          echo "vm_name=$(terraform output -raw vm_name)" >> "$GITHUB_OUTPUT"

      # ── Ansible ────────────────────────────────────────────────────────────
      - name: Create Ansible inventory
        run: |
          mkdir -p configuration-management/inventory
          cat > configuration-management/inventory/hosts.yml << EOF
          all:
            children:
              azure_vms:
                hosts:
                  ${{ steps.tf_out.outputs.vm_name }}:
                    ansible_host: ${{ steps.tf_out.outputs.vm_ip }}
                    ansible_user: azureuser
                    ansible_ssh_private_key_file: ~/.ssh/id_rsa
                    ansible_python_interpreter: /usr/bin/python3
                vars:
                  ansible_ssh_common_args: '-o StrictHostKeyChecking=no'
                  environment_name: "${{ env.ENVIRONMENT }}"
          EOF
        env:
          VM_IP: ${{ steps.tf_out.outputs.vm_ip }}
          VM_NAME: ${{ steps.tf_out.outputs.vm_name }}
          ENVIRONMENT: ${{ env.ENVIRONMENT }}

      - name: Copy environment variables
        run: |
          cp environments/${{ env.ENVIRONMENT }}/ansible_vars.yml configuration-management/group_vars/azure_vms.yml

      - name: Install Ansible collections
        run: |
          cd configuration-management
          ansible-galaxy collection install -r requirements.yml

      - name: Wait for SSH to be ready
        run: |
          for i in {1..20}; do
            if ssh -o ConnectTimeout=10 -o StrictHostKeyChecking=no -o BatchMode=yes azureuser@${{ steps.tf_out.outputs.vm_ip }} 'echo "SSH is ready"'; then
              echo "[SUCCESS] VM is accessible via SSH"
              break
            fi
            if [ $i -eq 20 ]; then
              echo "[FAIL] VM not ready after 10 minutes"
              exit 1
            fi
            echo "Waiting for SSH... (attempt $i/20)"
            sleep 30
          done

      - name: Run Ansible Playbook
        run: |
          cd configuration-management
          ansible-playbook -i inventory/hosts.yml site.yml -v

  # 2 ────────────────── Smoke tests ─────────────────────────────────────────────
  smoke-tests:
    name: 'Smoke Tests'
    runs-on: ubuntu-latest
    needs: deploy
    env:
      PUBLIC_IP_ADDRESS: ${{ needs.deploy.outputs.vm_ip }}
      SSH_USER: azureuser
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Add SSH key
        run: |
          mkdir -p ~/.ssh
          echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/id_rsa
          chmod 600 ~/.ssh/id_rsa

      - name: Install system packages
        run: sudo apt-get update && sudo apt-get install -y netcat-openbsd

      - name: Setup Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.11'

      - name: Install test dependencies
        run: |
          pip install "pytest==8.4.1"

      - name: Run smoke tests
        run: |
          cd tests
          python -m pytest test_smoke.py -v

      - name: Basic deployment verification
        run: |
          echo "[PASS] Development environment deployed successfully"
          echo "[PASS] Infrastructure provisioned"
          echo "[PASS] Configuration applied"
          echo "[PASS] Basic connectivity verified"

Staging Environment Deployment

The Deploy to Staging workflow automates the process of promoting infrastructure and configuration changes from development to the staging environment. It is triggered either manually or automatically after a successful development deployment. The workflow performs the following key actions:

- Checks Development Success: Ensures the development deployment completed successfully before proceeding.

- Deploys Infrastructure & Configuration: Provisions the staging environment using Terraform and applies configuration with Ansible, using staging-specific variables and settings.

- Runs Security & Firewall Tests: Executes automated security and firewall validation tests to verify that the staging environment meets security and compliance requirements.

- Hands Off to Production Approval: After successful deployment and testing, completion of this workflow triggers the production workflow, which waits at the production environment's reviewer gate for manual approval, providing a clear promotion path.

This workflow enforces a controlled, auditable promotion process, ensuring only validated and secure changes reach staging and are eligible for production deployment.
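The first check in the list above can be pictured as a small predicate over the triggering event. The following is only an illustrative sketch in Python, not part of the workflow itself; the function name `should_promote` is hypothetical, and it models the idea that a manual dispatch always passes while an automatic trigger passes only when the upstream run succeeded.

```python
from typing import Optional


def should_promote(event_name: str, upstream_conclusion: Optional[str] = None) -> bool:
    """Return True when a promotion run is allowed to start.

    A manual dispatch always passes; an automatic trigger passes only
    if the upstream workflow run concluded with 'success'.
    """
    if event_name == "workflow_dispatch":
        return True
    return event_name == "workflow_run" and upstream_conclusion == "success"


print(should_promote("workflow_run", "success"))  # True
print(should_promote("workflow_run", "failure"))  # False
print(should_promote("workflow_dispatch"))        # True
```

Keeping this rule in one place makes it easier to reason about which runs can reach staging at all.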

Create .github/workflows/deploy-staging.yml:

name: 'Deploy to Staging'

on:
  workflow_run:
    workflows: ["Deploy to Development"]
    types:
      - completed
  workflow_dispatch:
    inputs:
      ref:
        description: 'The branch or commit SHA to deploy to staging'
        required: true
        default: 'develop'

# ── global defaults ───────────────────────────────────────────────────────────
env:
  ARM_USE_OIDC: true
  ARM_USE_CLI: false
  ENVIRONMENT: staging

permissions:
  id-token: write
  contents: read

# ──────────────────────────────────────────────────────────────────────────────
jobs:
  # 0 ───────────── Confirm Dev succeeded (manual dispatch passes straight through) ─
  check-dev-success:
    name: 'Check Dev Deployment Success'
    runs-on: ubuntu-latest
    if: github.event.workflow_run.conclusion == 'success' || github.event_name == 'workflow_dispatch'
    steps:
      - name: Dev deployment successful
        run: echo "Development deployment completed successfully, proceeding to staging"

  # 1 ────────────────── Deploy infra & config to staging ────────────────────────
  deploy:
    name: 'Deploy Infrastructure & Configuration'
    runs-on: ubuntu-latest
    needs: check-dev-success
    environment: staging
    env:
      ARM_CLIENT_ID: ${{ secrets.AZURE_CLIENT_ID }}
      ARM_TENANT_ID: ${{ secrets.AZURE_TENANT_ID }}
      ARM_SUBSCRIPTION_ID: ${{ secrets.AZURE_SUBSCRIPTION_ID }}
    outputs:
      vm_ip: ${{ steps.terraform-outputs.outputs.vm_ip }}
      vm_name: ${{ steps.terraform-outputs.outputs.vm_name }}
    steps:
      - name: Checkout code from the Dev run
        uses: actions/checkout@v4
        with:
          # pulls the exact commit SHA that kicked off Deploy to Development
          ref: ${{ github.event.inputs.ref || github.event.workflow_run.head_sha }}

      - name: Azure Login via OIDC
        uses: azure/login@v1
        with:
          client-id: ${{ env.ARM_CLIENT_ID }}
          tenant-id: ${{ env.ARM_TENANT_ID }}
          subscription-id: ${{ env.ARM_SUBSCRIPTION_ID }}

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v3
        with:
          terraform_version: 1.12.2

      - name: Setup Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.11'

      - name: Install Ansible
        run: |
          python -m pip install --upgrade pip
          pip install ansible

      - name: Setup SSH Keys
        run: |
          mkdir -p ~/.ssh provisioning/keys
          echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/id_rsa
          chmod 600 ~/.ssh/id_rsa
          # Generate public key from private key
          ssh-keygen -y -f ~/.ssh/id_rsa > provisioning/keys/id_rsa.pub

      # ── Terraform ──────────────────────────────────────────────────────────
      - name: Copy environment configuration
        run: |
          cp environments/${{ env.ENVIRONMENT }}/terraform.tfvars provisioning/
          cp environments/${{ env.ENVIRONMENT }}/backend.tf provisioning/

      - name: Terraform init / plan / apply
        run: |
          cd provisioning
          terraform init
          terraform plan -var-file=terraform.tfvars
          terraform apply -auto-approve -var-file=terraform.tfvars

      - name: Get Terraform Outputs
        id: terraform-outputs
        run: |
          cd provisioning
          echo "vm_ip=$(terraform output -raw public_ip_address)" >> "$GITHUB_OUTPUT"
          echo "vm_name=$(terraform output -raw vm_name)" >> "$GITHUB_OUTPUT"

      # ── Ansible ────────────────────────────────────────────────────────────
      - name: Create Ansible inventory
        run: |
          mkdir -p configuration-management/inventory
          cat > configuration-management/inventory/hosts.yml << EOF
          all:
            children:
              azure_vms:
                hosts:
                  ${{ steps.terraform-outputs.outputs.vm_name }}:
                    ansible_host: ${{ steps.terraform-outputs.outputs.vm_ip }}
                    ansible_user: azureuser
                    ansible_ssh_private_key_file: ~/.ssh/id_rsa
                    ansible_python_interpreter: /usr/bin/python3
                vars:
                  ansible_ssh_common_args: '-o StrictHostKeyChecking=no'
                  environment_name: "${{ env.ENVIRONMENT }}"
          EOF
        env:
          VM_IP: ${{ steps.terraform-outputs.outputs.vm_ip }}
          VM_NAME: ${{ steps.terraform-outputs.outputs.vm_name }}
          ENVIRONMENT: ${{ env.ENVIRONMENT }}

      - name: Copy group variables
        run: |
          cp environments/${{ env.ENVIRONMENT }}/ansible_vars.yml configuration-management/group_vars/azure_vms.yml

      - name: Install Ansible collections
        run: |
          cd configuration-management
          ansible-galaxy collection install -r requirements.yml

      - name: Wait for SSH to be ready
        run: |
          for i in {1..20}; do
            if ssh -o ConnectTimeout=10 -o StrictHostKeyChecking=no -o BatchMode=yes azureuser@${{ steps.terraform-outputs.outputs.vm_ip }} 'echo "SSH is ready"'; then
              echo "[PASS] VM is accessible via SSH"
              break
            fi
            if [ $i -eq 20 ]; then
              echo "[FAIL] VM not ready after 10 minutes"
              exit 1
            fi
            echo "Waiting for SSH... (attempt $i/20)"
            sleep 30
          done

      - name: Run Ansible Playbook
        run: |
          cd configuration-management
          ansible-playbook -i inventory/hosts.yml site.yml -v

  # 2 ────────────────── Security & firewall tests ──────────────────────────────
  security-tests:
    name: 'Security & Firewall Tests'
    runs-on: ubuntu-latest
    needs: deploy
    env:
      VM_IP: ${{ needs.deploy.outputs.vm_ip }}
      VM_NAME: ${{ needs.deploy.outputs.vm_name }}
      ENVIRONMENT: staging
    steps:
      - name: Checkout code from the Dev run
        uses: actions/checkout@v4
        with:
          # same ref as the deploy job: the dispatch input if given,
          # otherwise the commit SHA that kicked off Deploy to Development
          ref: ${{ github.event.inputs.ref || github.event.workflow_run.head_sha }}

      - name: Setup Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.11'

      - name: Install test dependencies
        run: |
          pip install pytest testinfra pyyaml

      - name: Setup SSH key for tests
        run: |
          mkdir -p ~/.ssh
          echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/id_rsa
          chmod 600 ~/.ssh/id_rsa

      - name: Run security tests
        run: |
          cd tests
          python -m pytest test_security.py -v

      - name: Additional security verification
        run: |
          echo "[PASS] Security scans completed"
          echo "[PASS] Firewall rules verified"
          echo "[PASS] Access controls validated"
          echo "[PASS] Vulnerability assessment passed"

Production Environment Deployment

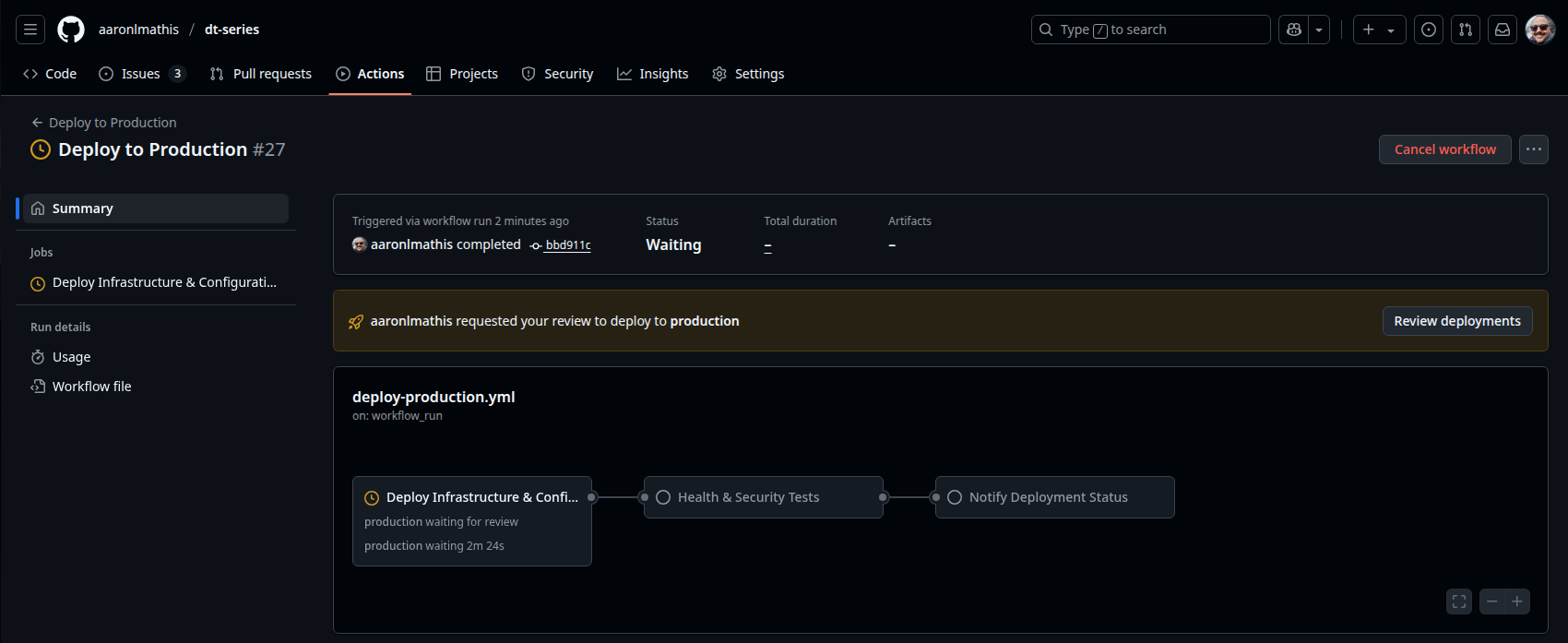

The Deploy to Production workflow automates the final and most critical stage of the CI/CD pipeline, ensuring that only thoroughly tested and approved changes reach the production environment. This workflow is designed for maximum control, security, and auditability. It starts after a successful staging run or a manual dispatch, and then waits for reviewer approval to enforce human oversight.

Key features of the production deployment workflow:

- Manual Approval Gate: The deploy job targets the production GitHub environment, so it pauses until a required reviewer configured on that environment explicitly approves the run. This step enforces a separation of duties and ensures that only authorized personnel can release changes to production.

- Infrastructure Provisioning: Runs terraform init, terraform plan, and terraform apply to provision or update resources in Azure according to the latest, approved code.

- Configuration Management: After infrastructure is provisioned, the workflow generates an Ansible inventory based on Terraform outputs and applies the production Ansible playbook to configure VMs with hardened security and operational settings.

- Health Checks and Security Verification: Executes a dedicated job to run health checks and security validation tests using Python and pytest, confirming that the deployed infrastructure meets all operational, security, and compliance requirements.

- Deployment Notification: Upon completion, the workflow posts a deployment status notification to GitHub by creating an issue, providing a clear audit trail and visibility for stakeholders.

This workflow enforces best practices for production deployments by combining automation with manual controls, validation, and transparent reporting. It minimizes the risk of production outages, ensures compliance, and provides confidence that only validated, secure, and approved changes are released to end users.
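To make the inventory-generation step more concrete, here is a minimal Python sketch of the same idea: turning Terraform outputs into the inventory structure Ansible expects. It assumes the input has the shape produced by `terraform output -json`, where each output is an object with a `value` key. The function name `build_inventory` and the sample values are hypothetical; the workflow itself builds the file with a shell heredoc.

```python
import json


def build_inventory(tf_outputs: dict, environment: str, user: str = "azureuser") -> dict:
    """Build an Ansible inventory structure from `terraform output -json` data."""
    vm_name = tf_outputs["vm_name"]["value"]
    vm_ip = tf_outputs["public_ip_address"]["value"]
    return {
        "all": {
            "children": {
                "azure_vms": {
                    "hosts": {
                        vm_name: {
                            "ansible_host": vm_ip,
                            "ansible_user": user,
                            "ansible_ssh_private_key_file": "~/.ssh/id_rsa",
                            "ansible_python_interpreter": "/usr/bin/python3",
                        }
                    },
                    "vars": {"environment_name": environment},
                }
            }
        }
    }


# Hypothetical sample data in the shape of `terraform output -json`
sample_outputs = {
    "vm_name": {"value": "vm-prod-01"},
    "public_ip_address": {"value": "203.0.113.10"},
}
inventory = build_inventory(sample_outputs, "production")
print(json.dumps(inventory, indent=2))
```

Building the structure as data rather than as text avoids quoting and indentation mistakes; whether you then serialize it as YAML or JSON is a choice to verify against your Ansible setup.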

Create .github/workflows/deploy-production.yml:

name: Deploy to Production

on:
  workflow_run:                       # ← fired by the staging pipeline
    workflows: ["Deploy to Staging"]
    types: [completed]
  workflow_dispatch:
    inputs:
      ref:
        description: 'The branch or commit SHA to deploy to production'
        required: true

env:
  ARM_USE_OIDC: true
  ARM_USE_CLI: false
  ENVIRONMENT: production             # used by TF / Ansible paths

permissions:
  id-token: write
  contents: read
  issues: write                       # needed by notification step

# ──────────────────────────────────────────────────────────────────────────────
jobs:
  # 1 ────────────────── Deploy infrastructure & config ─────────────────────────
  deploy:
    name: Deploy Infrastructure & Configuration
    if: github.event_name == 'workflow_dispatch' ||
      github.event.workflow_run.conclusion == 'success'
    runs-on: ubuntu-latest
    environment:
      name: production                # ← reviewers gate lives here
    env:
      ARM_CLIENT_ID: ${{ secrets.AZURE_CLIENT_ID }}
      ARM_TENANT_ID: ${{ secrets.AZURE_TENANT_ID }}
      ARM_SUBSCRIPTION_ID: ${{ secrets.AZURE_SUBSCRIPTION_ID }}
    outputs:
      vm_ip: ${{ steps.tf_out.outputs.vm_ip }}
      vm_name: ${{ steps.tf_out.outputs.vm_name }}
    steps:
      - uses: actions/checkout@v4
        with:
          # Use the dispatch input if available, otherwise use the SHA from the completed staging run
          ref: ${{ github.event.inputs.ref || github.event.workflow_run.head_sha }}

      - name: Azure login (OIDC)
        uses: azure/login@v1
        with:
          client-id: ${{ env.ARM_CLIENT_ID }}
          tenant-id: ${{ env.ARM_TENANT_ID }}
          subscription-id: ${{ env.ARM_SUBSCRIPTION_ID }}

      - name: Set up tooling
        uses: actions/setup-python@v4
        with:
          python-version: "3.11"

      - name: Install Ansible
        run: |
          python -m pip install --upgrade pip
          pip install ansible

      - name: Set up Terraform
        uses: hashicorp/setup-terraform@v3
        with:
          terraform_version: 1.12.2

      - name: Prepare SSH key
        run: |
          mkdir -p ~/.ssh provisioning/keys
          echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/id_rsa
          chmod 600 ~/.ssh/id_rsa
          ssh-keygen -y -f ~/.ssh/id_rsa > provisioning/keys/id_rsa.pub

      # ── Terraform ──────────────────────────────────────────────────────────
      - name: Copy environment files
        run: |
          cp environments/${{ env.ENVIRONMENT }}/terraform.tfvars provisioning/
          cp environments/${{ env.ENVIRONMENT }}/backend.tf provisioning/

      - name: Terraform init / plan / apply
        run: |
          cd provisioning
          terraform init
          terraform plan -var-file=terraform.tfvars
          terraform apply -auto-approve -var-file=terraform.tfvars

      - name: Capture TF outputs
        id: tf_out
        run: |
          cd provisioning
          echo "vm_ip=$(terraform output -raw public_ip_address)" >> "$GITHUB_OUTPUT"
          echo "vm_name=$(terraform output -raw vm_name)" >> "$GITHUB_OUTPUT"

      # ── Ansible ────────────────────────────────────────────────────────────
      - name: Build inventory
        run: |
          mkdir -p configuration-management/inventory
          cat > configuration-management/inventory/hosts.yml << EOF
          all:
            children:
              azure_vms:
                hosts:
                  ${TF_VM_NAME}:
                    ansible_host: ${TF_VM_IP}
                    ansible_user: azureuser
                    ansible_ssh_private_key_file: ~/.ssh/id_rsa
                    ansible_python_interpreter: /usr/bin/python3
                vars:
                  ansible_ssh_common_args: '-o StrictHostKeyChecking=no'
                  environment_name: '${ENVIRONMENT}'
          EOF
        env:
          TF_VM_IP: ${{ steps.tf_out.outputs.vm_ip }}
          TF_VM_NAME: ${{ steps.tf_out.outputs.vm_name }}
          ENVIRONMENT: ${{ env.ENVIRONMENT }}

      - name: Copy group vars
        run: |
          cp environments/${{ env.ENVIRONMENT }}/ansible_vars.yml \
             configuration-management/group_vars/azure_vms.yml

      - name: Install collections
        run: |
          cd configuration-management
          ansible-galaxy collection install -r requirements.yml

      - name: Wait for SSH
        run: |
          for i in {1..10}; do
            if ssh -o BatchMode=yes -o StrictHostKeyChecking=no \
                 -o ConnectTimeout=10 azureuser@${{ steps.tf_out.outputs.vm_ip }} \
                 "echo ok" >/dev/null 2>&1; then
              echo "VM ready"; break
            fi
            # Fail the job instead of falling through when the VM never answers
            if [ "$i" -eq 10 ]; then
              echo "[FAIL] VM not ready after 10 attempts"; exit 1
            fi
            echo "Waiting… ($i/10)"; sleep 30
          done

      - name: Apply playbook
        run: |
          cd configuration-management
          ansible-playbook -i inventory/hosts.yml site.yml -v

  # 2 ────────────────── Health & security tests ────────────────────────────────
  health-checks:
    name: 'Health & Security Tests'
    needs: deploy
    if: ${{ needs.deploy.result == 'success' }}
    runs-on: ubuntu-latest
    env:
      VM_IP: ${{ needs.deploy.outputs.vm_ip }}
      VM_NAME: ${{ needs.deploy.outputs.vm_name }}
      ENVIRONMENT: production
    steps:
      - uses: actions/checkout@v4
        with:
          # Test the same ref that the deploy job checked out
          ref: ${{ github.event.inputs.ref || github.event.workflow_run.head_sha }}

      - uses: actions/setup-python@v4
        with:
          python-version: "3.11"

      - run: pip install pytest testinfra pyyaml

      - name: Add SSH key
        run: |
          mkdir -p ~/.ssh
          echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/id_rsa
          chmod 600 ~/.ssh/id_rsa

      - name: Run test suites
        run: |
          cd tests
          pytest -v test_health.py test_security.py

  # 3 ────────────────── Notify result ──────────────────────────────────────────
  notify-deployment:
    name: 'Notify Deployment Status'
    runs-on: ubuntu-latest
    needs: [deploy, health-checks]
    if: ${{ always() && needs.deploy.result != 'skipped' }}
    steps:
      - name: Deployment notification
        uses: actions/github-script@v7
        with:
          script: |
            const deploymentStatus = '${{ needs.health-checks.result }}';
            const emoji = deploymentStatus === 'success' ? '[SUCCESS]' : '[FAILURE]';
            const status = deploymentStatus === 'success' ? 'successful' : 'failed';

            const message = `${emoji} Production deployment ${status}!

            **Environment:** Production
            **Commit:** ${context.sha.substring(0, 7)}
            **Actor:** ${context.actor}
            **Status:** ${deploymentStatus}

            [View deployment details](${context.payload.repository.html_url}/actions/runs/${context.runId})`;

            // Create a GitHub issue for deployment notification
            await github.rest.issues.create({
              owner: context.repo.owner,
              repo: context.repo.repo,
              title: `${emoji} Production Deployment ${status} - ${context.sha.substring(0, 7)}`,
              body: message,
              labels: ['production', 'deployment', 'notification']
            });

            console.log("Production deployment notification sent");

Note: This workflow is meant for demonstration and teaching purposes only. It should not be used in a production environment.

Developing a Testing Framework

Enterprise CI/CD requires comprehensive testing to ensure infrastructure reliability and security compliance. Your testing framework should validate infrastructure, deployments, security posture, and performance characteristics.

Best Practices for Developing a CI/CD Testing Framework

Building a robust testing framework for CI/CD pipelines is essential for ensuring reliability, security, and maintainability. Here are some best practices to follow:

- Test Early and Often: Integrate tests at every stage of the pipeline, on pull requests, merges, and deployments, to catch issues as soon as possible.

- Automate Everything: Automate all tests, including unit, integration, security, and compliance checks, to ensure consistency and reduce manual effort.

- Isolate Environments: Use separate environments (dev, staging, production) with environment-specific configurations and tests to prevent cross-contamination and ensure accurate validation.

- Enforce Idempotency: Ensure that infrastructure and configuration changes are idempotent, so repeated runs yield the same results without side effects.

- Comprehensive Coverage: Include tests for infrastructure validation, configuration correctness, security hardening, service health, and disaster recovery.

- Fail Fast: Configure pipelines to fail quickly on critical errors, preventing faulty changes from progressing further in the workflow.

- Use Realistic Test Data: Mirror production-like data and scenarios in staging to uncover issues that may not appear in development.

- Security as Code: Treat security and compliance checks as first-class citizens in your test suite, automating vulnerability scans and policy enforcement.

- Clear Reporting: Provide actionable, easy-to-understand test reports and logs to speed up troubleshooting and remediation.

- Version Control Tests: Store all test scripts and configurations in version control alongside your infrastructure code for traceability and collaboration.

- Continuous Improvement: Regularly review and update tests to cover new features, address regressions, and adapt to evolving requirements.

By following these practices, you can build a CI/CD testing framework that supports rapid, safe, and reliable infrastructure automation at scale.
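As one illustration of the idempotency practice above, a second `terraform plan` after an apply should report nothing to change. The sketch below relies on Terraform's `-detailed-exitcode` flag, which makes `plan` exit with 0 for no changes, 1 for an error, and 2 when changes are pending. The helper names `classify_plan_exit` and `second_plan_is_clean` are hypothetical, and the working directory and var-file defaults are assumptions based on the layout used in this series.

```python
import subprocess

# Exit codes of `terraform plan -detailed-exitcode`
PLAN_EXIT_MEANING = {0: "no-changes", 1: "error", 2: "changes-pending"}


def classify_plan_exit(returncode: int) -> str:
    """Translate a plan exit code into a readable status."""
    return PLAN_EXIT_MEANING.get(returncode, "unknown")


def second_plan_is_clean(workdir: str, var_file: str = "terraform.tfvars") -> bool:
    """Run a plan after apply; True means the configuration converged."""
    result = subprocess.run(
        [
            "terraform", "plan",
            "-detailed-exitcode",
            "-input=false",
            f"-var-file={var_file}",
        ],
        cwd=workdir,
        capture_output=True,
        text=True,
        check=False,  # non-zero exit codes are expected and inspected below
    )
    return classify_plan_exit(result.returncode) == "no-changes"


print(classify_plan_exit(2))  # changes-pending
```

A pipeline step could call `second_plan_is_clean("provisioning")` right after the apply and fail the job when it returns False.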

Note: The testing framework and scripts provided here are simplified examples intended for tutorial purposes only. They are not production-ready and should be adapted and expanded to meet the specific requirements and security standards of your environment.

How the CI/CD Workflows and Testing Operate

Our CI/CD pipeline is designed for automation and quality assurance across all environments. Here’s how the process works:

1. Pull Request Validation:

When a developer opens a pull request (PR) from a feature branch, the Infrastructure Validation Tests (test_infrastructure.py) are automatically executed. These tests verify that all environment configuration files exist, are correctly formatted, and follow best practices for isolation and security.

2. Merge to Develop Branch:

If the PR is approved and merged into the develop branch, the deploy-development workflow is triggered. This workflow:

- Provisions infrastructure in the development environment using Terraform and Ansible.

- Runs smoke tests (test_smoke.py) to ensure the VM is accessible, services are running, and firewall rules are correct.

3. Promotion to Staging:

If all development deployment and smoke tests pass, the deploy-staging workflow is automatically triggered. This workflow:

- Deploys the infrastructure and configuration to the staging environment.

- Runs basic security compliance tests (test_security.py) to validate hardening, firewall, authentication, and system security settings.

4. Production Approval Gate:

If the staging deployment and security tests pass, the production workflow starts and pauses at a manual approval gate. A notification is sent to the users listed as required reviewers for the production environment.

5. Production Deployment:

Upon approval, the deploy-production workflow is triggered. This workflow:

- Provisions and configures the production environment.

- Runs both security tests (test_security.py) and health checks (test_health.py) to verify operational readiness, security compliance, and system health.

This automated workflow ensures that only validated, secure, and approved changes are promoted through each environment, with basic testing and manual controls at critical stages.
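The promotion path described above can be summarized as an ordered list of stages, each with the test suites that guard it. This is only an illustrative model in Python, not something the workflows read; the stage labels and the helper names `suites_for` and `next_stage` are hypothetical, while the test file names come from the steps above.

```python
from typing import Optional

# Ordered promotion path: (stage, test suites that must pass at that stage)
PIPELINE_STAGES = [
    ("pull-request", ["test_infrastructure.py"]),
    ("development", ["test_smoke.py"]),
    ("staging", ["test_security.py"]),
    ("production", ["test_security.py", "test_health.py"]),
]


def suites_for(stage: str) -> list[str]:
    """Return the test suites that run at the given stage."""
    for name, suites in PIPELINE_STAGES:
        if name == stage:
            return suites
    raise KeyError(f"Unknown stage: {stage}")


def next_stage(stage: str) -> Optional[str]:
    """Return the stage that follows, or None after production."""
    names = [name for name, _ in PIPELINE_STAGES]
    index = names.index(stage)
    return names[index + 1] if index + 1 < len(names) else None


print(suites_for("production"))
print(next_stage("staging"))
```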

Pytest Configuration

Before we start writing our tests, let’s configure pytest. Create tests/pytest.ini:

[pytest]
# ── Paths ─────────────────────────────────────────────────────────────────────
# This file lives in tests/, so paths are relative to that directory
testpaths =
    .

# ── Discovery patterns ────────────────────────────────────────────────────────
python_files =
    test_*.py
python_functions =
    test_*
python_classes =
    Test*

# ── Global options ────────────────────────────────────────────────────────────
addopts =
    -v
    --tb=short
    --strict-markers
    --disable-warnings

minversion = 6.0

# ── Custom markers (keep in sync with your test modules) ──────────────────────
markers =
    unit: Unit tests
    integration: Integration tests
    security: Security validation tests
    performance: Performance tests
    smoke: Smoke tests for production
    dev: Development environment tests
    staging: Staging environment tests
    production: Production environment tests

# ── Warning filters ───────────────────────────────────────────────────────────
filterwarnings =
    ignore::UserWarning
    ignore::DeprecationWarning

A pytest configuration file (commonly named pytest.ini) is used to customize and control the behavior of the pytest testing framework. This file allows you to define test discovery patterns, set command-line options, configure output formatting, register custom markers for categorizing tests, and manage warnings or minimum version requirements.

By centralizing these settings, the pytest config ensures consistent test execution across different environments and team members, making it easier to maintain and scale your testing strategy in larger projects.
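Because `--strict-markers` makes pytest reject any marker that is not registered, it can help to check the registered names programmatically. The sketch below is one possible approach using only the standard library: it parses the `markers` entry of an ini file with `configparser`. The function names and the shortened sample ini text are hypothetical.

```python
import configparser

# Shortened, hypothetical sample of a pytest.ini
SAMPLE_INI = """\
[pytest]
markers =
    smoke: Smoke tests for production
    security: Security validation tests
"""


def registered_markers(ini_text: str) -> set[str]:
    """Return the marker names registered in the [pytest] section."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)
    raw = parser.get("pytest", "markers", fallback="")
    names = set()
    for line in raw.splitlines():
        line = line.strip()
        if line:
            # Each entry has the form "name: description"
            names.add(line.split(":", 1)[0].strip())
    return names


def unregistered(used: set[str], ini_text: str) -> set[str]:
    """Return markers used in tests that the ini file does not register."""
    return used - registered_markers(ini_text)


print(sorted(registered_markers(SAMPLE_INI)))
print(unregistered({"smoke", "chaos"}, SAMPLE_INI))
```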

Infrastructure Validation Tests

The test_infrastructure.py script provides automated validation of your environment configuration files and enforces best practices for environment isolation and security. It uses Python’s pytest framework to perform the following checks:

- Existence of Configuration Files: Verifies that each environment (dev, staging, production) has the required terraform.tfvars, backend.tf, and ansible_vars.yml files.

- Terraform Variables Format: Ensures that all required Terraform variables (such as resource_group_name, environment, location, vm_name, vm_size) are present in each environment’s .tfvars file.

- Ansible Variables Format: Checks that each ansible_vars.yml contains required variables like environment_name, ssh_port, fail2ban_maxretry, and timezone.

- Environment Isolation: Confirms that resource group names and VM names are unique across environments, preventing accidental resource overlap.

- Production Security Settings: Validates that the production environment has stricter security settings than development (e.g., lower fail2ban_maxretry, higher fail2ban_bantime, and debug_mode disabled).

This script helps maintain consistency, enforce environment isolation, and ensure that security standards are met before infrastructure changes are deployed.
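The isolation check depends on pulling string values such as resource_group_name and vm_name out of each terraform.tfvars file. As a sketch of one way to do that, the helper below uses a regular expression that tolerates aligned equals signs and trailing comments. It only handles simple quoted string assignments, not lists, maps, or heredocs; the function name and the sample values are hypothetical.

```python
import re

# Matches lines such as:  vm_name   = "vm-dev-01"   # optional comment
_ASSIGNMENT = re.compile(r'^\s*([A-Za-z_][A-Za-z0-9_-]*)\s*=\s*"([^"]*)"')


def parse_tfvars_strings(text: str) -> dict[str, str]:
    """Extract simple `name = "value"` assignments from tfvars text."""
    values = {}
    for line in text.splitlines():
        match = _ASSIGNMENT.match(line)
        if match:
            values[match.group(1)] = match.group(2)
    return values


# Hypothetical sample content
sample = '''
# dev environment
resource_group_name = "rg-dev"   # trailing comment
vm_name             = "vm-dev-01"
vm_size = "Standard_B2s"
'''
print(parse_tfvars_strings(sample))
```

For anything beyond flat string variables, a real HCL parser would be a safer choice than a regular expression.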

Create tests/test_infrastructure.py:

"""
Infrastructure validation tests
"""

import pytest
import os
import yaml


def test_environment_configs_exist():
    """Test that all environment configurations exist"""
    environments = ['dev', 'staging', 'production']

    for env in environments:
        # Check Terraform configs
        tfvars_path = f"environments/{env}/terraform.tfvars"
        backend_path = f"environments/{env}/backend.tf"
        ansible_vars_path = f"environments/{env}/ansible_vars.yml"

        assert os.path.exists(tfvars_path), f"Missing {tfvars_path}"
        assert os.path.exists(backend_path), f"Missing {backend_path}"
        assert os.path.exists(ansible_vars_path), f"Missing {ansible_vars_path}"


def test_terraform_variables_format():
    """Test that Terraform variables are properly formatted"""
    environments = ['dev', 'staging', 'production']
    required_vars = [
        'resource_group_name',
        'environment',
        'location',
        'vm_name',
        'vm_size'
    ]

    for env in environments:
        tfvars_path = f"environments/{env}/terraform.tfvars"

        with open(tfvars_path, 'r') as f:
            content = f.read()

        for var in required_vars:
            assert var in content, f"Missing required variable {var} in {tfvars_path}"


def test_ansible_variables_format():
    """Test that Ansible variables are properly formatted"""
    environments = ['dev', 'staging', 'production']
    required_vars = [
        'environment_name',
        'ssh_port',
        'fail2ban_maxretry',
        'timezone'
    ]

    for env in environments:
        ansible_vars_path = f"environments/{env}/ansible_vars.yml"

        with open(ansible_vars_path, 'r') as f:
            vars_data = yaml.safe_load(f)

        for var in required_vars:
            assert var in vars_data, f"Missing required variable {var} in {ansible_vars_path}"


def test_environment_isolation():
    """Test that environments have different resource names"""
    environments = ['dev', 'staging', 'production']
    resource_groups = []
    vm_names = []

    for env in environments:
        tfvars_path = f"environments/{env}/terraform.tfvars"

        with open(tfvars_path, 'r') as f:
            content = f.read()

        # Extract resource group name and VM name
        for line in content.split('\n'):
            if line.startswith('resource_group_name'):
                rg_name = line.split('=')[1].strip().strip('"')
                resource_groups.append(rg_name)
            elif line.startswith('vm_name'):
                vm_name = line.split('=')[1].strip().strip('"')
                vm_names.append(vm_name)

    # Ensure all resource groups and VM names are unique
    assert len(set(resource_groups)) == len(resource_groups), "Resource groups must be unique across environments"
    assert len(set(vm_names)) == len(vm_names), "VM names must be unique across environments"


def test_production_security_settings():
    """Test that production has stricter security settings"""
    with open('environments/production/ansible_vars.yml', 'r') as f:
        prod_vars = yaml.safe_load(f)

    with open('environments/dev/ansible_vars.yml', 'r') as f:
        dev_vars = yaml.safe_load(f)

    # Production should have stricter fail2ban settings
    assert prod_vars['fail2ban_maxretry'] <= dev_vars['fail2ban_maxretry'], \
        "Production should have stricter fail2ban retry limit"
    assert prod_vars['fail2ban_bantime'] >= dev_vars['fail2ban_bantime'], \
        "Production should have longer ban times"

    # Production should not have debug mode enabled
    assert not prod_vars.get('debug_mode', False), \
        "Production should not have debug mode enabled"


if __name__ == "__main__":
    pytest.main([__file__])

Development - Deployment Workflow Tests

The test_smoke.py script provides basic smoke tests to validate that a newly deployed VM is accessible and correctly configured after deployment. It uses Python’s pytest framework and standard libraries to perform the following checks:

- VM Connectivity: Verifies that the VM accepts TCP connections on port 22 (checked with nc), ensuring network reachability.

- SSH Connectivity: Attempts to establish an SSH connection to the VM, retrying several times if necessary, to confirm that SSH access is available.

- Required Services: Checks that essential services (such as sshd, fail2ban, chronyd, and ufw) are running on the VM.

- Firewall Rules: Ensures that the SSH port (22) is open and accessible, while confirming that unnecessary ports (e.g., 8080) are closed.

These smoke tests provide a fast, automated way to catch basic deployment or configuration errors before running more comprehensive or environment-specific tests. If any of these checks fail, it indicates a fundamental problem with the deployment that should be addressed before proceeding.
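The port checks described above shell out to nc, which is why the workflow installs netcat-openbsd. If you would rather avoid that dependency, a similar check can be sketched with Python's standard `socket` module. The function name `port_is_open` is hypothetical and is not part of the test script in this tutorial.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts
        return False
```

A smoke test could then assert `port_is_open(ip, 22)` and `not port_is_open(ip, 8080)`, mirroring the firewall expectations listed above.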

Create tests/test_smoke.py:

"""
Basic smoke tests for deployment validation
"""

from pathlib import Path
import os
import subprocess
import time

import pytest

# --------------------------------------------------------------------------- #
# Configuration
# --------------------------------------------------------------------------- #

TF_DIR = Path(os.getenv("TF_DIR", "../provisioning")).resolve()
SSH_USER = os.getenv("SSH_USER", "azureuser")
SSH_KEY = os.getenv("SSH_KEY", str(Path.home() / ".ssh/id_rsa"))

VM_IP = None  # cached after first lookup


def _run(cmd: list[str], **kwargs) -> subprocess.CompletedProcess:
    """Shortcut around subprocess.run with sane defaults."""
    return subprocess.run(
        cmd,
        capture_output=True,
        text=True,
        check=False,
        **kwargs,
    )


def get_vm_ip() -> str:
    """Return the VM’s public IP (read once from Terraform)."""
    # Prefer the value handed over by the deploy job
    ip_env = os.getenv("PUBLIC_IP_ADDRESS")
    if ip_env:
        return ip_env

    # Fallback to reading terraform output locally
    global VM_IP
    if VM_IP:
        return VM_IP

    result = _run(
        ["terraform", "output", "-raw", "public_ip_address"],
        cwd=TF_DIR,
    )
    if result.returncode != 0:
        pytest.fail(f"Unable to obtain VM IP from Terraform:\n{result.stderr}")

    VM_IP = result.stdout.strip()
    return VM_IP


def ssh_exec(remote_cmd: str) -> subprocess.CompletedProcess:
    """Execute a command on the VM via SSH and return CompletedProcess."""
    return _run(
        [
            "ssh",
            "-i", SSH_KEY,
            "-o", "ConnectTimeout=10",
            "-o", "StrictHostKeyChecking=no",
            f"{SSH_USER}@{get_vm_ip()}",
            remote_cmd,
        ]
    )


# --------------------------------------------------------------------------- #
# Tests
# --------------------------------------------------------------------------- #

def test_vm_connectivity():
    """VM must accept TCP connections on port 22."""
    ip = get_vm_ip()
    print("[TEST] Testing VM connectivity (TCP-port 22)…")
    result = _run(["nc", "-zvw", "5", ip, "22"])
    assert result.returncode == 0, f"TCP port 22 unreachable on {ip}"
    print("[PASS] VM is reachable")


def test_ssh_connectivity():
    """SSH must succeed."""
    print("[TEST] Testing SSH connectivity…")
    for _ in range(6):  # retry ~30 s total
        result = ssh_exec("echo SSH-OK")
        if result.returncode == 0 and "SSH-OK" in result.stdout:
            print("[PASS] SSH connection successful")
            return
        time.sleep(5)
    pytest.fail(f"SSH failed after retries:\n{result.stderr}")


def test_required_services():
    """Expected services must be active."""
    print("[TEST] Testing required services…")
    required = ["sshd", "fail2ban", "chronyd", "ufw"]
    for svc in required:
        result = ssh_exec(f"systemctl is-active {svc}")
        assert result.returncode == 0 and result.stdout.strip() == "active", (
            f"Service {svc} is NOT running"
        )
        print(f"[PASS] Service {svc} is running")


def test_firewall_rules():
    """SSH port open; example port 8080 closed."""
    ip = get_vm_ip()
    print("[TEST] Testing firewall configuration…")

    # Port 22 open
    assert _run(["nc", "-zvw", "5", ip, "22"]).returncode == 0, "Port 22 unreachable"
    print("[PASS] SSH port 22 is open")

    # Port 8080 closed
    assert _run(["nc", "-zvw", "5", ip, "8080"]).returncode != 0, "Port 8080 open"
    print("[PASS] Unnecessary ports are closed")


if __name__ == "__main__":
    pytest.main([__file__, "-v"])

Staging - Security Compliance Tests

When promoting our infrastructure from the development environment to staging, we run automated security compliance tests to verify that all hardening measures are correctly applied. This ensures that the staging environment meets enterprise security standards before any changes are promoted to production. The test_security.py script is executed as part of the staging deployment workflow and performs security-related checks, including:

- SSH and authentication hardening: Verifies that password authentication is disabled, root login is prevented, and only secure protocols are allowed.

- Firewall configuration: Ensures that only required ports are open and that default-deny policies are enforced.

- Fail2ban and intrusion prevention: Confirms that fail2ban is running and properly configured to protect against brute-force attacks.

- System hardening: Checks that unnecessary services are disabled, critical files have correct permissions, and kernel parameters are set for security.

- User and account security: Validates that only authorized accounts exist, no default or empty passwords are present, and log files are protected.

- Network and logging: Ensures that network services are restricted and that logs are securely managed.

By running these automated tests in staging, we catch misconfigurations and security regressions early, ensuring that only compliant and hardened infrastructure is eligible for production deployment.
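
The SSH hardening check is easy to get subtly wrong: a plain substring search over sshd_config also matches commented-out lines such as "#PermitRootLogin no". As a minimal sketch, assuming a configuration that does not rely on Include files, the helper below extracts the effective global directives by skipping comments, keeping the first value seen for each keyword (sshd uses the first value it obtains), and stopping at the first Match block. The name parse_sshd_config and the SAMPLE text are hypothetical and are not part of the test suite in this series.

```python
from typing import Dict


def parse_sshd_config(text: str) -> Dict[str, str]:
    """Return global sshd directives as {lowercased keyword: lowercased value}.

    Assumptions of this sketch: Include files are not followed, and
    everything from the first Match block onward is ignored because
    those settings only apply conditionally.
    """
    effective: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments (including commented-out directives).
        if not line or line.startswith("#"):
            continue
        # sshd accepts "Keyword value" as well as "Keyword=value".
        parts = line.replace("=", " ", 1).split(None, 1)
        keyword = parts[0].lower()
        if keyword == "match":
            break
        value = parts[1].strip().lower() if len(parts) > 1 else ""
        # The first occurrence wins; later duplicates are ignored.
        effective.setdefault(keyword, value)
    return effective


# Hypothetical sample used only to illustrate the behaviour.
SAMPLE = """\
# PermitRootLogin no
PermitRootLogin yes
PasswordAuthentication no
MaxAuthTries=3
PasswordAuthentication yes
Match User deploy
    X11Forwarding yes
"""

if __name__ == "__main__":
    settings = parse_sshd_config(SAMPLE)
    # The commented-out "PermitRootLogin no" must not mask the real setting.
    print(settings["permitrootlogin"])
    print(settings["passwordauthentication"])
```

In a test, the result could be compared directly, for example settings.get("permitrootlogin") == "no". On a live host, running sshd -T as root prints the effective configuration, including Include files, and is the more authoritative source where it is available.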

Create tests/test_security.py:

"""

Security validation tests

"""

import os
import time

import pytest
import testinfra
import yaml

def get_vm_ip(environment):
    """Get the VM IP and name from the Ansible inventory file.

    `environment` is accepted for call-site compatibility but is not used:
    the inventory path below is the same for every environment.
    """
    inventory_path = "configuration-management/inventory/hosts.yml"
    if not os.path.exists(inventory_path):
        pytest.skip(f"Inventory file not found: {inventory_path}")
    with open(inventory_path, 'r') as f:
        inventory = yaml.safe_load(f)
    vm_hosts = inventory['all']['children']['azure_vms']['hosts']
    # Use the first host listed under azure_vms
    vm_name = list(vm_hosts.keys())[0]
    vm_ip = vm_hosts[vm_name]['ansible_host']
    return vm_ip, vm_name

@pytest.fixture(scope="module")
def vm_connection(request):
    """Create testinfra connection to VM"""
    environment = request.config.getoption("--env", default="dev")
    vm_ip, vm_name = get_vm_ip(environment)
    connection_string = f"ssh://azureuser@{vm_ip}"
    host = testinfra.get_host(connection_string)
    # Wait for SSH to be available
    max_retries = 30
    for i in range(max_retries):
        try:
            host.run("echo 'SSH test'")
            break
        except Exception as e:
            if i == max_retries - 1:
                pytest.fail(f"Could not connect to VM after {max_retries} attempts: {e}")
            time.sleep(10)
    return host, vm_ip

def test_no_default_passwords(vm_connection):
    """Test that no default passwords are set"""
    host, _ = vm_connection
    # Check shadow file for password policies
    shadow_file = host.file("/etc/shadow")
    assert shadow_file.exists
    # Root should be locked
    root_entry = host.run("grep '^root:' /etc/shadow")
    assert "!" in root_entry.stdout or "*" in root_entry.stdout, "Root account should be locked"

def test_ssh_security_configuration(vm_connection):
    """Test SSH security settings"""
    host, _ = vm_connection
    sshd_config = host.file("/etc/ssh/sshd_config")
    config_content = sshd_config.content_string.lower()
    security_tests = [
        ("passwordauthentication no", "Password authentication should be disabled"),
        ("permitrootlogin no", "Root login should be disabled"),
        ("protocol 2", "SSH Protocol 2 should be enforced"),
        ("x11forwarding no", "X11 forwarding should be disabled"),
        ("maxauthtries", "Max auth tries should be limited")
    ]
    for setting, message in security_tests:
        assert setting in config_content, message

def test_firewall_rules(vm_connection):
    """Test firewall configuration"""
    host, _ = vm_connection
    # Check UFW status
    ufw_status = host.run("ufw status numbered")
    assert "Status: active" in ufw_status.stdout, "UFW should be active"
    # Should allow SSH
    assert "22/tcp" in ufw_status.stdout or "ssh" in ufw_status.stdout.lower(), \
        "SSH should be allowed through firewall"
    # HTTP/HTTPS rules are not asserted here; add checks if a web server is configured
    # Check that default policies are restrictive
    ufw_verbose = host.run("ufw status verbose")
    assert "default: deny (incoming)" in ufw_verbose.stdout.lower(), \
        "Default incoming policy should be deny"

def test_fail2ban_jails(vm_connection):
    """Test fail2ban jail configuration"""
    host, _ = vm_connection
    # Check fail2ban status
    fail2ban_status = host.run("fail2ban-client status")
    assert fail2ban_status.exit_status == 0, "fail2ban should be running"
    # Check SSH jail specifically
    ssh_jail = host.run("fail2ban-client status sshd")
    assert ssh_jail.exit_status == 0, "SSH jail should be configured"
    # Verify jail configuration
    jail_conf = host.file("/etc/fail2ban/jail.local")
    if jail_conf.exists:
        config_content = jail_conf.content_string
        assert "[sshd]" in config_content, "SSH jail should be configured"

def test_system_hardening(vm_connection):
    """Test system hardening measures"""
    host, _ = vm_connection
    # Check if unnecessary services are disabled
    unnecessary_services = ['telnet', 'rsh', 'rlogin']
    for service in unnecessary_services:
        service_check = host.run(f"systemctl is-enabled {service} 2>/dev/null || echo 'not-found'")
        assert "not-found" in service_check.stdout or "disabled" in service_check.stdout, \
            f"Service {service} should not be enabled"

def test_file_permissions(vm_connection):
    """Test critical file permissions"""
    host, _ = vm_connection
    critical_files = [
        ("/etc/passwd", "644"),
        ("/etc/shadow", "640"),
        ("/etc/group", "644"),
        ("/etc/ssh/sshd_config", "600")
    ]
    for file_path, expected_perms in critical_files:
        file_obj = host.file(file_path)
        if file_obj.exists:
            actual_perms = oct(file_obj.mode)[-3:]
            assert actual_perms == expected_perms, \
                f"File {file_path} has permissions {actual_perms}, expected {expected_perms}"

def test_network_security(vm_connection):
    """Test network security configuration"""
    host, _ = vm_connection
    # Check for open ports
    netstat_result = host.run("ss -tuln")
    # Should have SSH open
    assert ":22 " in netstat_result.stdout, "SSH port should be listening"
    # Check for unnecessary open ports
    dangerous_ports = [':23 ', ':135 ', ':139 ', ':445 ', ':3389 ']
    for port in dangerous_ports:
        assert port not in netstat_result.stdout, f"Dangerous port {port.strip()} should not be open"

def test_kernel_parameters(vm_connection):
    """Test kernel security parameters"""
    host, _ = vm_connection
    security_params = [
        ("net.ipv4.ip_forward", "0"),
        ("net.ipv4.conf.all.send_redirects", "0"),
        ("net.ipv4.conf.default.send_redirects", "0"),
        ("net.ipv4.conf.all.accept_redirects", "0"),
        ("net.ipv4.conf.default.accept_redirects", "0")
    ]
    for param, expected_value in security_params:
        sysctl_result = host.run(f"sysctl {param}")
        if sysctl_result.exit_status == 0:
            actual_value = sysctl_result.stdout.split('=')[-1].strip()
            assert actual_value == expected_value, \
                f"Kernel parameter {param} should be {expected_value}, got {actual_value}"

def test_user_accounts(vm_connection):
    """Test user account security"""
    host, _ = vm_connection
    # Check for users with UID 0 (should only be root)
    uid_zero_users = host.run("awk -F: '$3==0 {print $1}' /etc/passwd")
    uid_zero_list = uid_zero_users.stdout.strip().split('\n')
    assert uid_zero_list == ['root'], f"Only root should have UID 0, found: {uid_zero_list}"
    # Check for users with empty passwords
    empty_passwords = host.run("awk -F: '$2==\"\" {print $1}' /etc/shadow")
    assert empty_passwords.stdout.strip() == "", "No users should have empty passwords"

def test_log_file_permissions(vm_connection):
    """Test log file permissions"""
    host, _ = vm_connection
    log_files = [
        "/var/log/auth.log",
        "/var/log/syslog",
        "/var/log/fail2ban.log"
    ]
    for log_file in log_files:
        file_obj = host.file(log_file)
        if file_obj.exists:
            # Log files should not be world-readable (the "other" read bit must be unset)
            assert not (file_obj.mode & 0o004), f"Log file {log_file} should not be world-readable"

def test_cron_security(vm_connection):
    """Test cron security configuration"""
    host, _ = vm_connection
    # Check cron permissions
    cron_files = ["/etc/crontab", "/etc/cron.deny"]
    for cron_file in cron_files:
        file_obj = host.file(cron_file)
        if file_obj.exists: